Let’s pour one out for an old friend.

AWS recently announced a small, seemingly boring new feature for EC2 Auto Scaling: the ability to cancel a pending instance refresh. If you squinted, you might have missed it. It sounds like a minor quality-of-life update, something to make a sysadmin’s Tuesday slightly less terrible.

But this isn’t a feature. It’s a gold watch. It’s the pat on the back and the “thanks for your service” speech at the awkward retirement party.

The EC2 Auto Scaling Group (ASG), the bedrock of cloud elasticity, the one tool we all reflexively reached for, is being quietly put out to pasture.

No, AWS hasn’t officially killed it. You can still spin one up, just like you can still technically send a fax. AWS will happily support it. But its days as the default, go-to solution for modern workloads are decisively over. The battle for the future of scaling has ended, and the ASG wasn’t the winner. The new default is serverless containers, hyper-optimized Spot fleets, and platforms so abstract they’re practically invisible.

If you’re still building your infrastructure around the ASG, you’re building a brand-new house with plumbing from 1985. It’s time to talk about why our old friend is retiring and meet the eager new hires who are already measuring the drapes in its office.

So why is the ASG getting the boot?

We loved the ASG. It was a revolutionary idea. But like that one brilliant relative everyone dreads sitting next to at dinner, it was also exhausting. Its retirement was long overdue, and the reasons are the same frustrations we’ve all been quietly grumbling about into our coffee for years.

It promised automation but gave us chores

The ASG’s sales pitch was simple: “I’ll handle the scaling!” But that promise came with a three-page, fine-print addendum of chores.

It was the operational overhead that killed us. We were promised a self-driving car and ended up with a stick-shift that required constant, neurotic supervision. We became part-time Launch Template librarians, meticulously versioning every tiny change. We became health-check philosophers, endlessly debating the finer points of ELB vs. EC2 health checks.

And then… the Lifecycle Hooks.

A “Lifecycle Hook” is a polite, clinical term for a Rube Goldberg machine of desperation. It’s a panic button that triggers a Lambda, which calls a Systems Manager script, which sends a carrier pigeon to… maybe… drain a connection pool before the instance is ruthlessly terminated. Trying to debug one at 3 AM was a rite of passage, a surefire way to lose precious engineering time and a little bit of your soul.
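For the curious, here is roughly what the Lambda end of that contraption tends to look like. This is a minimal sketch, not anyone's production code: it assumes the function receives the EventBridge event for an instance-terminate lifecycle action, that `autoscaling` is a boto3 Auto Scaling client passed in by the caller, and that `drain` is a hypothetical callback you write yourself to close connections.

```python
from typing import Any, Callable, Mapping


def handle_termination_hook(
    event: Mapping[str, Any],
    autoscaling: Any,
    drain: Callable[[str], bool],
) -> str:
    """Drain an instance, then release the lifecycle hook.

    `event` is assumed to be the EventBridge payload for an
    "EC2 Instance-terminate Lifecycle Action". `autoscaling` is assumed to be
    a boto3 Auto Scaling client (or any object with the same method).
    """
    detail = event["detail"]
    instance_id = detail["EC2InstanceId"]

    # `drain` is a hypothetical helper: it returns True once connections are closed.
    try:
        drained = drain(instance_id)
    except Exception:
        drained = False

    # For a terminating instance, both results end in termination: CONTINUE
    # lets any remaining hooks run first, ABANDON skips them.
    result = "CONTINUE" if drained else "ABANDON"

    autoscaling.complete_lifecycle_action(
        LifecycleHookName=detail["LifecycleHookName"],
        AutoScalingGroupName=detail["AutoScalingGroupName"],
        LifecycleActionToken=detail["LifecycleActionToken"],
        LifecycleActionResult=result,
        InstanceId=instance_id,
    )
    return result
```

Passing the client in as an argument is a deliberate choice here: it lets you exercise the hook logic with a fake client on your laptop instead of discovering the bug during a real scale-in.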

It moves at a glacial pace

The second nail in the coffin was its speed. Or rather, the complete lack of it.

The ASG scales at the speed of a full VM boot. In our world of spiky, unpredictable traffic, that’s an eternity. It’s like pre-heating a giant, industrial pizza oven for 45 minutes just to toast a single slice of bread. By the time your new instance is booted, configured, service-discovered, and finally “InService,” the spike in traffic has already come and gone, leaving you with a bigger bill and a cohort of very annoyed users.
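To put a number on that, here is a back-of-the-envelope sketch. The function name and every figure in the usage note are made up for illustration; the only idea it encodes is that capacity arriving after "detection time plus startup time" only helps with whatever is left of the spike.

```python
def fraction_of_spike_covered(
    spike_seconds: float,
    detection_seconds: float,
    startup_seconds: float,
) -> float:
    """Fraction of a traffic spike during which the new capacity is serving.

    All inputs are illustrative assumptions, not measured values.
    """
    if spike_seconds <= 0:
        raise ValueError("spike_seconds must be positive")
    lag = detection_seconds + startup_seconds
    # Capacity that shows up after the spike has ended covers none of it.
    return max(0.0, spike_seconds - lag) / spike_seconds
```

With a hypothetical 5-minute spike, a 60-second alarm delay, and a 4-minute path to “InService,” the new VM covers none of the spike. Swap in a 30-second container start and the same arithmetic covers about 70% of it.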

It’s an expensive insurance policy

The ASG model is fundamentally wasteful. You run a “warm” fleet, paying for idle capacity just in case you need it. It’s like paying rent on a 5-bedroom house for your family of three, just in case 30 cousins decide to visit unannounced.

Scaling up was slow, and scaling down was even worse, riddled with fears of terminating the wrong instance and triggering a cascading failure. We ended up over-provisioning to avoid the pain of scaling, which completely defeats the purpose of “auto-scaling.”

The eager interns taking over the desk

So, the ASG has cleared out its desk. Who’s moving in? It turns out there’s a whole line of replacements, each one leaner, faster, and blissfully unconcerned with managing a “fleet.”

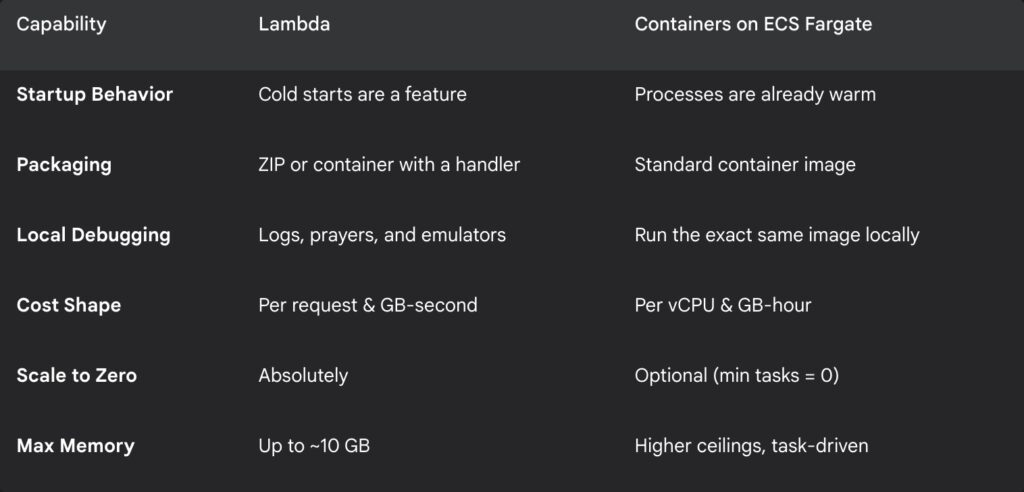

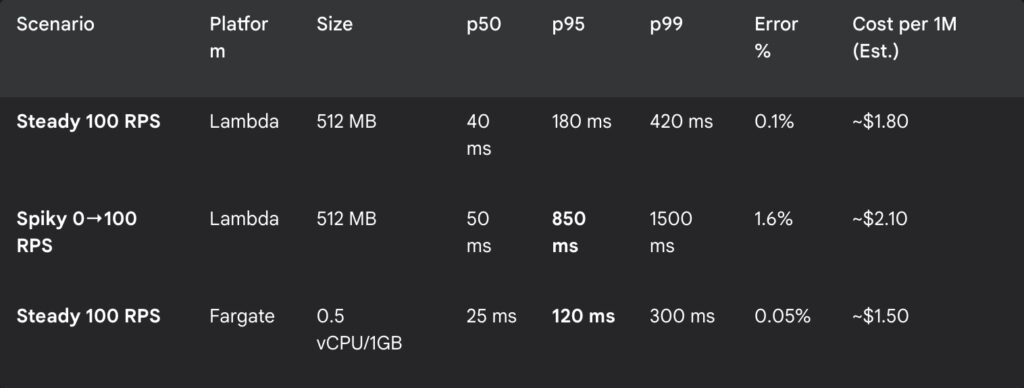

1. The appliance: Fargate and Cloud Run

First up is the “serverless container”. This is the hyper-efficient new hire who just says, “Give me the Dockerfile. I’ll handle the rest.”

With AWS Fargate or Google’s Cloud Run, you don’t have a fleet. You don’t manage VMs. You don’t patch operating systems. You don’t even think about an instance. You just define a task, give it some CPU and memory, and tell it how many copies you want. Cloud Run can scale from zero on demand, and a new Fargate task typically starts well before a fresh VM would have finished booting.

This is the appliance model. When you buy a toaster, you don’t worry about wiring the heating elements or managing its power supply. You just put in bread and get toast. Fargate is the toaster. The ASG was the “build-your-own-toaster” kit that came with a 200-page manual on electrical engineering.

Just look at the cognitive load. This is what it takes to get a basic ASG running via the CLI:

```shell
# The "Old Way": Just one of the many steps...
aws autoscaling create-auto-scaling-group \
  --auto-scaling-group-name my-legacy-asg \
  --launch-template "LaunchTemplateName=my-launch-template,Version='1'" \
  --min-size 1 \
  --max-size 5 \
  --desired-capacity 2 \
  --vpc-zone-identifier "subnet-0571c54b67EXAMPLE,subnet-0c1f4e4776EXAMPLE" \
  --health-check-type ELB \
  --health-check-grace-period 300 \
  --tags "Key=Name,Value=My-ASG-Instance,PropagateAtLaunch=true"
```

You still need to define the launch template, the subnets, the load balancer, the health checks…

Now, here’s the core of a Fargate task definition. It’s just a simple JSON file:

The “New Way”: a snippet from a Fargate task definition.

```json
{
  "family": "my-modern-app",
  "networkMode": "awsvpc",
  "containerDefinitions": [
    {
      "name": "my-container",
      "image": "nginx:latest",
      "cpu": 256,
      "memory": 512,
      "portMappings": [
        {
          "containerPort": 80,
          "hostPort": 80
        }
      ]
    }
  ],
  "requiresCompatibilities": ["FARGATE"],
  "cpu": "256",
  "memory": "512"
}
```

You define what you need, and the platform handles everything else.

2. The extreme couponer: Spot fleets

For workloads that are less “instant spike” and more “giant batch job,” we have the “optimized fleet”. This is the high-stakes, high-reward world of Spot Instances.

Spot used to be terrifying. AWS could pull the plug with two minutes’ notice, and your entire workload would evaporate. But now, with Spot Fleets and diversification, it’s the smartest tool in the box. You can tell AWS, “I need 1,000 vCPUs, and I don’t care what instance types you give me, just find the cheapest ones.”

The platform then builds a diversified fleet for you across multiple instance types and Availability Zones, making it incredibly resilient to any single Spot pool termination. It’s perfect for data processing, CI/CD runners, and any batch job that can be interrupted and resumed. The ASG was always too rigid for this kind of dynamic, cost-driven scaling.
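To make “diversified” a little more concrete, here is a toy allocation sketch. To be clear, this is not how AWS allocates Spot capacity; it is a simple greedy heuristic invented for illustration. The `SpotPool` type, the `max_share` cap, and any prices you feed it are all hypothetical. The point is only that spreading a vCPU target across several pools limits how much you lose when one pool gets reclaimed.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SpotPool:
    """One (instance type, Availability Zone) pool; prices are made up."""
    instance_type: str
    zone: str
    vcpus: int
    hourly_price: float


def diversify(
    pools: list[SpotPool],
    target_vcpus: int,
    max_share: float = 0.5,
) -> dict[tuple[str, str], int]:
    """Greedily fill a vCPU target from the cheapest pools first.

    No single pool is asked for more than roughly `max_share` of the target
    (rounded up to whole instances), so one reclaimed pool cannot take out
    the whole fleet. Returns instance counts keyed by (type, zone).
    """
    cap = target_vcpus * max_share
    remaining = target_vcpus
    plan: dict[tuple[str, str], int] = {}

    # Cheapest price per vCPU goes first.
    for pool in sorted(pools, key=lambda p: p.hourly_price / p.vcpus):
        if remaining <= 0:
            break
        wanted_vcpus = min(remaining, cap)
        count = math.ceil(wanted_vcpus / pool.vcpus)
        plan[(pool.instance_type, pool.zone)] = count
        remaining -= count * pool.vcpus

    if remaining > 0:
        raise ValueError("not enough pools to reach the target under max_share")
    return plan
```

Ask it for 100 vCPUs from a single pool with `max_share=0.5` and it refuses, which is the whole idea: if there is only one pool to draw from, you are not diversified.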

3. The paranoid security guard: MicroVMs

Then there’s the truly weird stuff: Firecracker. This is the technology that powers AWS Lambda and Fargate. It’s a “MicroVM” that gives you the iron-clad security isolation of a full virtual machine but with the lightning-fast startup speed of a container.

We’re talking boot times of as little as 125 milliseconds. This is for when you need to run thousands of tiny, separate, untrusted workloads simultaneously without them ever being able to see each other. It’s the ultimate “multi-tenant” dream, giving every user their own tiny, disposable, fire-walled VM in the blink of an eye.

4. The invisible platform: Edge runtimes

Finally, we have the platforms that are so abstract they’re “scaled to invisibility”. This is the world of Edge. Think Lambda@Edge or CloudFront Functions.

With these, you’re not even scaling in a region anymore. Your logic, your code, is automatically replicated and executed at hundreds of Points of Presence around the globe, as close to the end-user as possible. The entire concept of a “fleet” or “instance” just… disappears. The logic scales with the request.

Life after the funeral. How to adapt

Okay, the eulogy is over. The ASG is in its rocking chair on the porch. What does this mean for us, the builders? It’s time to sort through the old belongings and modernize the house.

Go full Marie Kondo on your architecture

First, you need to re-evaluate. Open up your AWS console and take a hard look at every single ASG you’re running. Be honest. Ask the tough questions:

- Does this workload really need to be stateful?

- Do I really need VM-level control, or am I just clinging to it for comfort?

- Is this a stateless web app that I’ve just been too lazy to containerize?

If it doesn’t spark joy (or isn’t a snowflake legacy app that’s impossible to change), thank it for its service and plan its migration.

Stop shopping for engines, start shopping for cars

The most important shift is this: Pick the runtime, not the infrastructure.

For too long, our first question was, “What EC2 instance type do I need?” That’s the wrong question. That’s like trying to build a new car by starting at the hardware store to buy pistons.

The right question is, “What’s the best runtime for my workload?”

- Is it a simple, event-driven piece of logic? That’s a Function (Lambda).

- Is it a stateless web app in a container? That’s a Serverless Container (Fargate).

- Is it a massive, interruptible batch job? That’s an Optimized Fleet (Spot).

- Is it a cranky, stateful monolith that needs a pet VM? Only then do you fall back to an Instance (EC2, maybe even with an ASG).
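If it helps, the checklist above fits in a few lines of code. This is a sketch of the decision order, not a rule engine: the `Workload` fields are hypothetical flags made up for this example, and the order of the checks (stateful first, then event-driven, then batch, then containers) is an assumption about which trait should win when several apply.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    """Hypothetical traits of a workload, used only for this illustration."""
    stateless: bool = True
    event_driven: bool = False
    interruptible_batch: bool = False
    containerized: bool = False


def pick_runtime(workload: Workload) -> str:
    """Map workload traits to the runtime categories from the checklist."""
    if not workload.stateless:
        # The cranky, stateful monolith keeps its pet VM.
        return "Instance (EC2)"
    if workload.event_driven:
        return "Function (Lambda)"
    if workload.interruptible_batch:
        return "Optimized Fleet (Spot)"
    if workload.containerized:
        return "Serverless Container (Fargate)"
    # Nothing else matched, so fall back to the old default.
    return "Instance (EC2)"
```

Notice that EC2 only shows up as a fallback. That is the point of the checklist: the instance is the last answer, not the first question.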

Automate logic, not instance counts

Your job is no longer to be a VM mechanic. Your team’s skills need to shift. Stop manually tuning desired_capacity and start designing event-driven systems.

Focus on scaling logic, not servers. Your scaling trigger shouldn’t be “CPU is at 80%.” It should be “The SQS queue depth is greater than 100” or “API latency just breached 200ms”. Let the platform, be it Lambda, Fargate, or a KEDA-powered Kubernetes cluster, figure out how to add more processing power.
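Here is what a queue-depth trigger boils down to, as a minimal sketch. The function and parameter names are hypothetical, and real platforms add smoothing and cooldowns on top, but the core arithmetic is just "backlog divided by how much one worker should carry," clamped to sensible bounds.

```python
import math


def desired_workers(
    queue_depth: int,
    messages_per_worker: int,
    min_workers: int = 0,
    max_workers: int = 50,
) -> int:
    """Size a worker pool from backlog instead of CPU.

    `messages_per_worker` is the backlog you are happy for a single worker
    to own; the bounds are illustrative defaults, not recommendations.
    """
    if messages_per_worker <= 0:
        raise ValueError("messages_per_worker must be positive")
    wanted = math.ceil(queue_depth / messages_per_worker)
    # Clamp so an empty queue can go to the floor and a flood cannot run away.
    return max(min_workers, min(max_workers, wanted))
```

With a target of 100 messages per worker, a queue of 101 asks for 2 workers and an empty queue asks for none. There is no instance count anywhere in sight, which is exactly the shift in mindset.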

Was it really better in the old days?

Of course, this move to abstraction isn’t without trade-offs. We’re gaining a lot, but we’re also losing something.

The gain is obvious: We get our nights and weekends back. We get drastically reduced operational overhead, faster scaling, and for most stateless workloads, a much lower bill.

The loss is control. You can’t SSH into a Fargate container. You can’t run a custom kernel module on Lambda. For those few, truly special, high-customization legacy workloads, this is a dealbreaker. They will be the ASG’s loyal companions in the retirement home.

But for everything else? The ASG is a relic. It was a brilliant, necessary solution for the problems of 2010. But the problems of 2025 and beyond are different. The cloud has evolved to scale logic, functions, and containers, not just nodes.

The king isn’t just dead. The very concept of a throne has been replaced by a highly efficient, distributed, and slightly impersonal serverless committee. And frankly, it’s about time.